Gemini AI Models

Gemini 1.5 Pro and 1.5 Flash, two of Google’s most prominent generative AI models, have drawn a lot of praise for their ability to process and analyze massive volumes of data, such as summarizing long documents and searching for scenes in film footage. New research, however, suggests that the models fall short of these claims.

Gemini AI Models Struggling with Long Contexts

Two studies examining how well Google’s Gemini models handle large datasets found that 1.5 Pro and 1.5 Flash often fail to answer questions about lengthy texts correctly. In document-based testing, the models answered correctly only 40% to 50% of the time. “While models like Gemini 1.5 Pro can technically process long contexts, we have seen many cases indicating that the models don’t actually ‘understand’ the content,” said Marzena Karpinska, a postdoc at UMass Amherst.

Context Window Limitations

A model’s context window is the amount of input data it can consider before generating an output. This input is measured in tokens, small units of data such as pieces of words. According to Google, the latest Gemini versions can handle 2 million tokens, roughly 1.4 million words, two hours of video, or 22 hours of audio. Practical tests show, however, that the models’ ability to reason over and answer questions about such large contexts is limited.
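Google’s figures imply roughly 0.7 words per token (1.4 million words divided by 2 million tokens). The sketch below uses that ratio to estimate whether a document fits in a context window. It assumes the ratio holds for any text, which it does not exactly: real token counts depend on the tokenizer, the language, and the content. The helper names are hypothetical.

```python
# Hypothetical helpers for back-of-the-envelope context-window math.
# The ratio is derived from Google's stated figures (about 1.4 million words
# for 2 million tokens); actual token counts depend on the tokenizer and text.
WORDS_PER_TOKEN = 1_400_000 / 2_000_000  # = 0.7


def estimate_tokens(word_count: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many tokens a text of `word_count` words would use."""
    return round(word_count / words_per_token)


def fits_in_context(word_count: int, context_tokens: int = 2_000_000) -> bool:
    """Return True if the estimated token count fits within the context window."""
    return estimate_tokens(word_count) <= context_tokens


if __name__ == "__main__":
    # A hypothetical 120,000-word novel.
    novel_words = 120_000
    print(estimate_tokens(novel_words))  # 171429
    print(fits_in_context(novel_words))  # True
```

Fitting in the window is only a precondition. As the studies show, a text that fits is not necessarily a text the model can reason over.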

Research Findings

In one study, researchers asked the models to evaluate true/false statements about recently published fiction books, chosen so that the models could not rely on prior knowledge of the stories. Gemini 1.5 Pro answered correctly only 46.7% of the time, and Flash only 20%. Because random guessing on a true/false statement is right about half the time, both models did worse than a coin flip at understanding these long texts.
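To see how a score like 46.7% or 20% compares with the 50% chance baseline, an exact binomial tail probability estimates how likely a pure guesser would be to score that low. This is a minimal sketch with hypothetical counts. It does not use the studies’ actual data, and the function names are illustrative.

```python
from math import comb


def accuracy(correct: int, total: int) -> float:
    """Fraction of true/false statements answered correctly."""
    return correct / total


def prob_at_most_by_chance(correct: int, total: int, p: float = 0.5) -> float:
    """P(X <= correct) for X ~ Binomial(total, p).

    This is the probability that a random guesser, right with probability p
    on each statement, scores this low or lower.
    """
    return sum(
        comb(total, k) * p**k * (1 - p) ** (total - k) for k in range(correct + 1)
    )


if __name__ == "__main__":
    # Hypothetical example: 20 correct answers out of 100 statements.
    correct, total = 20, 100
    print(f"accuracy = {accuracy(correct, total):.1%}")
    print("chance baseline = 50.0%")
    print(f"P(score <= {correct} by guessing) = {prob_at_most_by_chance(correct, total):.2e}")
```

A very small tail probability means the score is well below chance rather than random noise. A score just under 50% on a small test set may not be distinguishable from guessing.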

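A second study examined the models’ ability to reason over video, that is, to search through footage and answer questions about its content. The researchers built a dataset of images paired with questions and inserted them among distractor images to create slideshow-like footage. Flash struggled significantly: in one test, it correctly transcribed handwritten digits from a series of images only around 50% of the time, and accuracy dropped to around 30% when more digits were involved.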
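A video-style test built from still images can be sketched as follows. The sketch assumes that each handwritten digit sits in its own target frame, that target frames are interleaved with distractor frames at random positions while keeping their order, and that a transcription counts only if every digit matches. The frame names, helpers, and scoring rule are hypothetical and do not come from the study’s code.

```python
from __future__ import annotations

import random


def build_slideshow(
    targets: list[str], distractors: list[str], seed: int | None = None
) -> tuple[list[str], list[int]]:
    """Interleave target frames with distractor frames at random positions.

    Targets keep their original order. Returns the frames and target indices.
    """
    rng = random.Random(seed)
    total = len(targets) + len(distractors)
    # Pick which slots hold targets; sorting keeps the targets in order.
    target_slots = sorted(rng.sample(range(total), len(targets)))
    slot_set = set(target_slots)
    target_iter, distractor_iter = iter(targets), iter(distractors)
    frames = [
        next(target_iter) if i in slot_set else next(distractor_iter)
        for i in range(total)
    ]
    return frames, target_slots


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Share of digit strings transcribed exactly (every digit must match)."""
    if not references or len(predictions) != len(references):
        raise ValueError("need equal-length, non-empty predictions and references")
    hits = sum(p == r for p, r in zip(predictions, references))
    return hits / len(references)


if __name__ == "__main__":
    # Hypothetical frames: one handwritten digit per target image.
    targets = [f"digit_{d}.png" for d in "381902"]
    distractors = [f"distractor_{i}.png" for i in range(19)]
    frames, slots = build_slideshow(targets, distractors, seed=42)
    print(len(frames))  # 25
    print([frames[i] for i in slots] == targets)  # True
    # Score two hypothetical model transcriptions against the true digits.
    print(exact_match_accuracy(["381902", "381907"], ["381902", "381902"]))  # 0.5
```

Under exact-match scoring, more digits means more chances for a single error, which is consistent with accuracy falling as the digit count grows.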

Overpromising Capabilities

Both studies suggest that Google may have overpromised what its Gemini models can do. Other models the researchers tested, including OpenAI’s GPT-4o and Anthropic’s Claude 3.5 Sonnet, also performed poorly, but Google has marketed the long context window more heavily than its rivals, making it a headline feature.

Industry Implications

Generative AI is under increasing scrutiny as businesses and investors grow frustrated with the technology’s limitations. In recent surveys by Boston Consulting Group, half of the responding C-suite executives said they do not expect substantial productivity gains from generative AI and are worried about potential mistakes and data compromises.

Calls for Better Benchmarks

Researchers such as Karpinska and Michael Saxon of UC Santa Barbara argue that better benchmarks and more third-party critique are needed to validate claims about generative AI capabilities. Common benchmarks such as “needle in a haystack,” which Google often cites, measure only a model’s ability to retrieve a specific piece of information, such as a name or number, planted in a long context. They do not measure its ability to answer complex questions about that context.
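A needle-in-a-haystack test plants one fact (the “needle”) at a chosen depth in a long filler text and then asks the model to retrieve it. The sketch below builds such a prompt and scores an answer. The filler, needle, question, prompt template, and substring-match scoring rule are all hypothetical choices, not Google’s evaluation setup.

```python
def build_haystack(filler_sentences: list[str], needle: str, depth: float) -> str:
    """Insert `needle` at a relative depth (0.0 = start, 1.0 = end) of the filler."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    index = round(depth * len(filler_sentences))
    sentences = filler_sentences[:index] + [needle] + filler_sentences[index:]
    return " ".join(sentences)


def build_prompt(haystack: str, question: str) -> str:
    """Append a question to the long context (hypothetical template)."""
    return f"{haystack}\n\nQuestion: {question}\nAnswer:"


def retrieved(answer: str, expected: str) -> bool:
    """Score by case-insensitive substring match, a simple and lenient rule."""
    return expected.lower() in answer.lower()


if __name__ == "__main__":
    # Hypothetical filler, needle, and question.
    filler = [f"This is filler sentence number {i}." for i in range(1000)]
    needle = "The secret passcode is 7421."
    haystack = build_haystack(filler, needle, depth=0.5)
    prompt = build_prompt(haystack, "What is the secret passcode?")
    print(prompt.endswith("Answer:"))  # True
    # A model's reply would then be scored like this:
    print(retrieved("The secret passcode is 7421.", "7421"))  # True
```

Because the expected answer appears verbatim in the context, a high score here shows retrieval. It does not show the kind of reasoning across an entire text that the true/false questions about novels require.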

- Online Student Seva Portal (OSSP.IN) : Education & Career Updates

- Sarkari Tab: Complete information about government tabs in easy language

- How to detect fake Sapphire Gemestones | 4 Ways to Determine if a Sapphire is Real

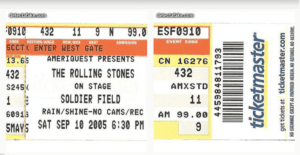

- How to Spot fake Ticketmaster Event Tickets? How do you check if my Ticketmaster tickets are real?

- How to detect fake Pandora Beads and Charms | Identifying Authentic Pandora Products

- How to detect fake Playstation 4 / PS4 Controllers | 9 Ways to Spot with Images

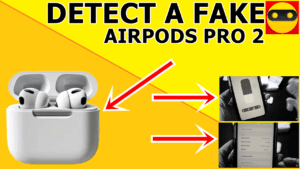

- How to Detect Fake AirPods Pro 2 with Screenshots

- How to detect fake Apple iPod Touch? Know the authentication of your ipod

- How to Spot fake Nike Air Jordan XIV (14) Retro in 2023 (All Colorways)

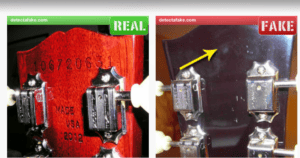

- How to Spot fake Gibson Guitars Is Your Music Instrument is Authentic?

- How to Detect Real vs Fake Diamond Chain with Screenshots

- How to detect fake Tiffany & Co. Jewelry | Tiffany & Co. Jewelry: How to Spot the Real Deal

- How to detect fake Super Nintendo Classic Edition | 6 Ways to Spot with Images

- HoHow to detect fake: Nike Air Jordan XXI (21) Retro in 2023 (All Colorways)

- How to detect fake Silver Bullion Bar | How To Tell The Difference Between Real And Fake Silver

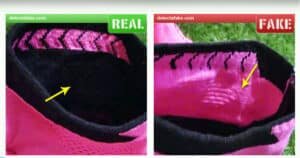

- How to detect fake Nike Air Max 2014 | Step by Step with Images

- How to detect fake: Nike Air Max 2015 | Step by Step with Images

- How to detect fake: Nike Foamposites | 10 Steps (easy)

- How to detect fake: Nike Mercurial Superfly 6 Very Easy Ways!

- How to get IRS 4th Stimulus check: Check Your Elegibility and Documents Apply Now